NativeScript Preview - Text to Speech 🗣️

Let's experience the beauty of NativeScript Preview by exploring the implementation of Text to Speech.

A common trait of professional software developers is that they do not let the implementation details of their job become "precious".

It is easy to become enamored with a particular framework or technology and forget the primary reason we program in the first place. We do not program merely because React, Angular, Vue, Svelte, Ionic, NativeScript, TypeScript, etc. exist. We program because of what these technologies allow us to do. We program because it is the absolute best option to put something into someone's hands that solves a real problem they are having, makes their life easier, and maybe even brings joy into their world.

NativeScript Preview can dramatically reduce the time and cognitive investment it takes to convert an idea into a valuable feature.

Let's explore a fun text-to-speech example with non-trivial implications.

The Demo

For a high-level view of how NativeScript Preview works, you can learn more here.

In a nutshell, you install the NativeScript Preview app on your phone and use it to scan a QR code on StackBlitz, which pushes the app to your device. As you develop, your changes are pushed to your phone in real time. Pretty cool!

The example we will demo is linked below. Let's go!

The NativeScript Component

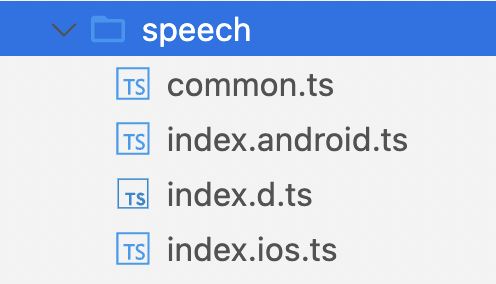

To start things off, we will place our speech component in its own directory, following a common pattern in the NativeScript community. The NativeScript compiler generates platform-specific JavaScript bundles, so you could technically mix your iOS and Android code in a single file, knowing the code for the non-targeted platform will be pruned for you. However, a cleaner approach is to separate the iOS and Android code completely and program against a type definition that determines the component's API regardless of platform.

The image above shows what a typical file structure looks like.

You will notice an additional common.ts file in the directory, which we use to abstract common elements such as enums and interfaces. For example, in the code below, we define a Languages enum to control the languages we can use and a SpeakOptions interface for type safety.

```ts
// app/speech/common.ts
export enum Languages {
  American = 'en-US',
  British = 'en-GB',
  Spanish = 'es-ES',
  Australian = 'en-AU',
}

export interface SpeakOptions {
  text: string;
  language?: string;
  // Invoked with no arguments once the utterance finishes
  finishedCallback?: () => void;
}
```

Within our index.d.ts file, we define the shape of the TextToSpeech class that will guide each platform implementation. It declares three methods, speak, stop, and destroy, which we will implement within the index.ios.ts and index.android.ts files.

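```ts
// app/speech/index.d.ts
// `export *` re-exports the names but does not bring them into scope,
// so SpeakOptions must also be imported for use in this file
import { SpeakOptions } from './common';
export * from './common';

export declare class TextToSpeech {
  speak(options: SpeakOptions): void;
  stop(): void;
  destroy(): void;
}
```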
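To make the "program against a type definition" idea concrete without any native APIs, here is a minimal sketch in plain TypeScript. The `SpeechEngine` interface and the `LoggingTextToSpeech` class are hypothetical stand-ins, not part of the demo; a real platform file would drive AVSpeechSynthesizer on iOS or the Android speech engine instead of recording text. The point is that calling code only ever depends on the three declared methods.

```typescript
// Same shape as SpeakOptions in common.ts
interface SpeakOptions {
  text: string;
  language?: string;
  finishedCallback?: () => void;
}

// Hypothetical interface mirroring the three methods declared in index.d.ts
interface SpeechEngine {
  speak(options: SpeakOptions): void;
  stop(): void;
  destroy(): void;
}

// Hypothetical "platform" implementation that records text instead of speaking.
// `implements` makes the compiler reject it if a declared method is missing.
class LoggingTextToSpeech implements SpeechEngine {
  spoken: string[] = [];

  speak(options: SpeakOptions): void {
    this.spoken.push(`${options.language ?? 'default'}: ${options.text}`);
    // Pretend the utterance finished immediately
    options.finishedCallback?.();
  }

  stop(): void {
    // Nothing is ever in progress in this fake, so there is nothing to stop
  }

  destroy(): void {
    this.stop();
    this.spoken = [];
  }
}

// Calling code depends only on the contract, never on a platform class
function greet(engine: SpeechEngine, onDone: () => void): void {
  engine.speak({ text: 'Hello', language: 'en-AU', finishedCallback: onDone });
}

const engine = new LoggingTextToSpeech();
let finished = false;
greet(engine, () => {
  finished = true;
});
const recorded = engine.spoken.slice();
engine.destroy();
```

Swapping in a different implementation of `SpeechEngine` requires no change to `greet`, which is the same property the per-platform index files give the Angular app.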

iOS

The example includes both an iOS and an Android implementation, but to avoid redundancy, we will focus solely on iOS. The same principles apply to every platform NativeScript supports.

The Native Component

We will begin our tour with a redacted version of the TextToSpeech class so you can see the high-level pieces before we step through each method individually and talk about how it works. The most important thing to notice is that this class implements the three methods we previously declared in our type definition, plus a private init helper.

```ts
// app/speech/index.ios.ts
import { SpeakOptions } from './common';
export * from './common';

export class TextToSpeech {
  utterance: AVSpeechUtterance;
  speech: AVSpeechSynthesizer;
  delegate: MySpeechDelegate;
  options: SpeakOptions;

  speak(options: SpeakOptions) { }
  stop() { }
  destroy() { }
  private init() { }
}
```

The utterance and speech instances are used to do the talking while the delegate and options members are used to communicate with the outside world.

We will walk through the delegate implementation in a moment. If you are unfamiliar with this concept, it is a common pattern in iOS development to use a delegate to handle specific tasks for a concrete instance, such as event dispatching. You can learn more here.

```ts
utterance: AVSpeechUtterance;
speech: AVSpeechSynthesizer;
delegate: MySpeechDelegate;
options: SpeakOptions;
```

The Core Methods

The speak method is pretty cut and dried. We initialize some things, create an utterance from the text and language, and then call this.speech.speakUtterance with that utterance. But... what is really happening here?

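```ts
speak(options: SpeakOptions) {
  // Create the synthesizer on first use, or stop any speech in progress
  this.init();
  this.options = options;
  // An utterance is a chunk of text to speak, plus the settings that affect it
  this.utterance = AVSpeechUtterance.alloc().initWithString(options.text);
  this.utterance.voice = AVSpeechSynthesisVoice.voiceWithLanguage(options.language);
  this.speech.speakUtterance(this.utterance);
}
```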

Inside init, we check whether we already have an instance of speech, which leads us down one of two paths. If we have an instance, we do not need to re-instantiate the speech synthesizer; instead, we just need to stop any speech that may be in progress. If we do not, we instantiate an AVSpeechSynthesizer and assign it to speech. We also create an instance of MySpeechDelegate, assign it to delegate, and connect the delegate to the speech instance.

```ts
private init() {
  if (!this.speech) {
    this.speech = AVSpeechSynthesizer.alloc().init();
    this.delegate = MySpeechDelegate.initWithOwner(new WeakRef(this));
    this.speech.delegate = this.delegate;
  } else {
    this.stop();
  }
}
```
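The create-once-then-reuse logic in init does not depend on iOS, so it can be sketched and exercised with a fake synthesizer. `FakeSynthesizer` and `SketchTextToSpeech` are hypothetical stand-ins for AVSpeechSynthesizer and the demo's class, and the delegate wiring is left out; the static `created` counter only exists so the reuse is observable.

```typescript
interface SpeakOptions {
  text: string;
  language?: string;
  finishedCallback?: () => void;
}

// Hypothetical stand-in for AVSpeechSynthesizer
class FakeSynthesizer {
  static created = 0;
  speaking = false;
  stopCount = 0;
  lastText = '';

  constructor() {
    FakeSynthesizer.created++;
  }

  speakUtterance(text: string): void {
    this.lastText = text;
    this.speaking = true;
  }

  stopSpeakingAtBoundary(): void {
    this.speaking = false;
    this.stopCount++;
  }
}

class SketchTextToSpeech {
  speech?: FakeSynthesizer;
  options?: SpeakOptions;

  speak(options: SpeakOptions): void {
    this.init();
    this.options = options;
    // init() guarantees speech exists at this point
    this.speech!.speakUtterance(options.text);
  }

  stop(): void {
    // Only interrupt when something is actually being spoken
    if (this.speech?.speaking) {
      this.speech.stopSpeakingAtBoundary();
    }
  }

  private init(): void {
    if (!this.speech) {
      // First call: create the synthesizer exactly once
      this.speech = new FakeSynthesizer();
    } else {
      // Later calls: reuse it, but cut off any speech in progress
      this.stop();
    }
  }
}

const tts = new SketchTextToSpeech();
tts.speak({ text: 'first' });
tts.speak({ text: 'second' }); // interrupts "first" and reuses the synthesizer
```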

The stop method detects if speaking is in progress, and if so, it instructs the AVSpeechSynthesizer instance to stop speaking immediately.

```ts
stop() {
  if (this.speech?.speaking) {
    this.speech.stopSpeakingAtBoundary(AVSpeechBoundary.Immediate);
  }
}
```

By setting speech to null, we release our reference to the AVSpeechSynthesizer instance so that it can be cleaned up.

```ts
destroy() {
  this.speech = null;
}
```
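Because stop guards with optional chaining, calling it after destroy is a harmless no-op rather than a crash. A small sketch of that lifecycle, using a hypothetical `FakeSynthesizer` in place of AVSpeechSynthesizer and a hypothetical `LifecycleSketch` in place of TextToSpeech:

```typescript
// Hypothetical stand-in for AVSpeechSynthesizer, already mid-utterance
class FakeSynthesizer {
  speaking = true;
  stopSpeakingAtBoundary(): void {
    this.speaking = false;
  }
}

class LifecycleSketch {
  speech: FakeSynthesizer | null = new FakeSynthesizer();

  stop(): void {
    // Optional chaining short-circuits to undefined when speech is null
    if (this.speech?.speaking) {
      this.speech.stopSpeakingAtBoundary();
    }
  }

  destroy(): void {
    // Drop our reference so the synthesizer can be released
    this.speech = null;
  }
}

const lifecycle = new LifecycleSketch();
const synth = lifecycle.speech; // kept only so the sketch can inspect it afterwards
lifecycle.stop(); // interrupts the in-progress speech
lifecycle.destroy(); // releases the reference
lifecycle.stop(); // safe no-op: speech is null, nothing throws
```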

The Delegate

To learn more about iOS delegates, this resource is a good read.

In a nutshell, "Delegation is a design pattern that enables a class or structure to hand off (or delegate) some of its responsibilities to an instance of another type."
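Stripped of the Objective-C runtime, that relationship can be sketched in plain TypeScript: the synthesizer does the work and calls back into whatever delegate it was handed. `SynthesizerDelegate`, `MiniSynthesizer`, and `RecordingDelegate` are hypothetical names for illustration only. Because every callback is optional, the synthesizer invokes them with optional chaining, and a delegate implements only the events it cares about.

```typescript
// Hypothetical protocol: every callback is optional
interface SynthesizerDelegate {
  didStart?(text: string): void;
  didFinish?(text: string): void;
}

// Hypothetical synthesizer that hands event handling off to its delegate
class MiniSynthesizer {
  delegate?: SynthesizerDelegate;

  speak(text: string): void {
    this.delegate?.didStart?.(text);
    // ...the actual speaking would happen here...
    this.delegate?.didFinish?.(text);
  }
}

// A delegate that only cares about one event
class RecordingDelegate implements SynthesizerDelegate {
  finished: string[] = [];

  didFinish(text: string): void {
    this.finished.push(text);
  }
}

const synthesizer = new MiniSynthesizer();
const recorder = new RecordingDelegate();
synthesizer.delegate = recorder;
synthesizer.speak('Hello');

// With no delegate attached, speaking still works; events simply go nowhere
new MiniSynthesizer().speak('nobody listening');
```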

The delegate class can take several forms depending on what you are trying to accomplish, and its shape is defined by the protocol it implements. In our case, we implement the AVSpeechSynthesizerDelegate protocol, whose (optional) callback methods you see below. NativeScript makes the actual connection via the static ObjCProtocols property, which takes an array of any number of Objective-C protocols.

Let us take a moment and talk about how this delegate is being instantiated.

```ts
@NativeClass
class MySpeechDelegate extends NSObject implements AVSpeechSynthesizerDelegate {
  owner: WeakRef<TextToSpeech>;
  static ObjCProtocols = [AVSpeechSynthesizerDelegate];

  static initWithOwner(owner: WeakRef<TextToSpeech>) {
    const delegate = <MySpeechDelegate>MySpeechDelegate.new();
    delegate.owner = owner;
    return delegate;
  }

  speechSynthesizerDidStartSpeechUtterance() {}
  speechSynthesizerDidFinishSpeechUtterance() {}
  speechSynthesizerDidPauseSpeechUtterance() {}
  speechSynthesizerDidContinueSpeechUtterance() {}
  speechSynthesizerDidCancelSpeechUtterance() {}
}
```

The static initWithOwner pattern is also common in iOS development because of its heavy use of the delegate design pattern.

In init, we create a WeakRef to our TextToSpeech instance and assign it to the owner property on our delegate. A WeakRef holds a weak reference to another object without preventing that object from being garbage-collected, which helps avoid a reference cycle between the TextToSpeech instance and the delegate it owns. You can read more about this feature in the WeakRef MDN documentation.

The delegate itself is created within the static initWithOwner method, which takes an owner parameter and assigns it to the delegate instance after calling MySpeechDelegate.new(). A NativeScript-specific detail here is .new(): it is not part of the JavaScript language but a convenience the NativeScript runtime exposes on native classes, mirroring Objective-C's new (equivalent to alloc().init()), so you can instantiate a native class without calling a specific initializer. Once the delegate has been created and its owner assigned, we return it to the owner for future delegation-related responsibilities.
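The factory-plus-weak-owner shape can be sketched without NativeScript. Here a plain `new SketchDelegate()` stands in for the runtime-provided MySpeechDelegate.new(), and `Owner` and `SketchDelegate` are hypothetical names. Note that deref() only returns undefined after the target has actually been garbage-collected, which happens at an unpredictable time, so the sketch only exercises the case where the owner is still strongly held.

```typescript
// Hypothetical owner standing in for TextToSpeech
class Owner {
  name = 'tts';
}

class SketchDelegate {
  owner?: WeakRef<Owner>;

  // Mirrors MySpeechDelegate.initWithOwner; `new SketchDelegate()`
  // stands in for the NativeScript runtime's MySpeechDelegate.new()
  static initWithOwner(owner: WeakRef<Owner>): SketchDelegate {
    const delegate = new SketchDelegate();
    delegate.owner = owner;
    return delegate;
  }

  ownerName(): string | undefined {
    // deref() returns undefined once the owner has been garbage-collected
    return this.owner?.deref()?.name;
  }
}

const owner = new Owner(); // this strong reference keeps the owner alive
const delegate = SketchDelegate.initWithOwner(new WeakRef(owner));
```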

The one method that does the "real work" in our delegate is speechSynthesizerDidFinishSpeechUtterance, which interacts not only with the TextToSpeech instance but also with the callback that the Angular app passes in via the SpeakOptions object. We try to get a reference to the owner by calling this.owner?.deref(), and if we have an instance, we call its stop method and then the optional finishedCallback from the speak options defined by the Angular app.

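```ts
speechSynthesizerDidFinishSpeechUtterance(
  synthesizer: AVSpeechSynthesizer,
  utterance: AVSpeechUtterance
) {
  // deref() returns undefined if the TextToSpeech instance was collected
  const owner = this.owner?.deref();
  if (owner) {
    owner.stop();
    // finishedCallback is optional in SpeakOptions, so guard the call
    owner.options?.finishedCallback?.();
  }
}
```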
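Because finishedCallback is declared optional in SpeakOptions, calling it unconditionally would throw a TypeError whenever a caller omits it. The sketch below uses a hypothetical `handleFinish` function that mirrors the delegate method's body; `OwnerRef` is a hypothetical structural type that a real WeakRef also satisfies, so plain objects stand in for weak references in the usage.

```typescript
interface SpeakOptions {
  text: string;
  language?: string;
  finishedCallback?: () => void;
}

// Hypothetical owner shape: only what the finish handler touches
interface FinishTarget {
  options?: SpeakOptions;
  stop(): void;
}

// Anything with deref(), such as WeakRef<FinishTarget>
interface OwnerRef {
  deref(): FinishTarget | undefined;
}

// Mirrors the body of speechSynthesizerDidFinishSpeechUtterance
function handleFinish(ref: OwnerRef | undefined): void {
  const owner = ref?.deref();
  if (owner) {
    owner.stop();
    // Optional call: a no-op when no callback was supplied
    owner.options?.finishedCallback?.();
  }
}

let calls = 0;
let stops = 0;
const withCallback: FinishTarget = {
  options: { text: 'hi', finishedCallback: () => { calls++; } },
  stop: () => { stops++; },
};
const withoutCallback: FinishTarget = {
  options: { text: 'hi' },
  stop: () => { stops++; },
};

handleFinish({ deref: () => withCallback });
handleFinish({ deref: () => withoutCallback }); // would throw without the `?.`
handleFinish({ deref: () => undefined }); // owner already collected: nothing happens
```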

The other four methods simply log the event name and utterance.speechString. We could flesh these out later, depending on user requirements.

```ts
speechSynthesizerDidStartSpeechUtterance(
  synthesizer: AVSpeechSynthesizer,
  utterance: AVSpeechUtterance
) {
  console.log('speechSynthesizerDidStartSpeechUtterance:', utterance.speechString);
}

speechSynthesizerDidPauseSpeechUtterance(
  synthesizer: AVSpeechSynthesizer,
  utterance: AVSpeechUtterance
) {
  console.log('speechSynthesizerDidPauseSpeechUtterance:', utterance.speechString);
}

speechSynthesizerDidContinueSpeechUtterance(
  synthesizer: AVSpeechSynthesizer,
  utterance: AVSpeechUtterance
) {
  console.log('speechSynthesizerDidContinueSpeechUtterance:', utterance.speechString);
}

speechSynthesizerDidCancelSpeechUtterance(
  synthesizer: AVSpeechSynthesizer,
  utterance: AVSpeechUtterance
) {
  console.log('speechSynthesizerDidCancelSpeechUtterance:', utterance.speechString);
}
```

Angular

The Angular portion of the application maintains the status quo by sticking to convention. It is pretty awesome to have the ability to interface with native platform APIs using JavaScript and equally awesome to be able to abstract those implementation details away so that Angular is oblivious to the brave new world it is living in. As far as the Angular application is concerned, it's just Angular.

The Angular Component Class

The one thing we are going to do is tighten up the connection between Angular and the hardware by using NgZone to ensure that our Angular bindings update when a native event is triggered.

```ts
import { Component, OnInit, inject, NgZone } from '@angular/core';
import { Languages, TextToSpeech, SpeakOptions } from './speech';

@Component({
  selector: 'ns-talk',
  templateUrl: './talk.component.html',
})
export class TalkComponent implements OnInit {
  TTS: TextToSpeech;
  ngZone = inject(NgZone);
  speaking = false;
  speechText = 'Thank you for exploring NativeScript with StackBlitz!';
  speakOptions: SpeakOptions = {
    text: 'Whatever you like',
    language: Languages.Australian,
    finishedCallback: () => {
      this.reset();
      console.log('Finished Speaking!');
    },
  };

  ngOnInit() {
    this.TTS = new TextToSpeech();
  }

  talk() { }
  stop() { }
  reset() { }
}
```

If you remember, in the previous section, we fired a callback function in the delegate. You can see that function is defined on the speakOptions object, which calls reset and logs to the console. We are also instantiating a TextToSpeech instance inside ngOnInit and assigning it to TTS.

The talk method does some preliminary work by assigning speechText to speakOptions.text and setting the speaking flag to true. Finally, it calls TTS.speak with the updated options.

```ts
talk() {
  this.speakOptions.text = this.speechText;
  this.speaking = true;
  this.TTS.speak(this.speakOptions);
}
```

The stop method calls the stop method on the TTS instance and also calls reset.

```ts
stop() {
  this.TTS.stop();
  this.reset();
}
```

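The reset method is interesting because it uses NgZone to manage change detection. reset can be called from finishedCallback, which is triggered by a native delegate callback that runs outside Angular's zone, so Angular would not otherwise notice that speaking changed. Every time reset is called, it calls ngZone.run with a callback that sets speaking to false, which ensures change detection runs and the view updates.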

```ts
reset() {
  this.ngZone.run(() => {
    // since stop can be called as result of native API interaction
    // we call within ngZone to ensure view bindings update
    this.speaking = false;
  });
}
```
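One way to see why every state change funnels through reset is to pull the component's logic away from Angular. This is a plain-TypeScript sketch, not Angular code: `TalkState` and `FakeEngine` are hypothetical, and `runInZone` is a hypothetical injected function that, in the real component, would be `(fn) => this.ngZone.run(fn)`. Here it just counts invocations, so we can confirm that both the native-triggered path (finishedCallback) and the button path (stop) re-enter the zone.

```typescript
interface SpeakOptions {
  text: string;
  language?: string;
  finishedCallback?: () => void;
}

// Hypothetical engine: remembers the options so the sketch can
// simulate the native "finished" event by invoking the callback
class FakeEngine {
  lastOptions?: SpeakOptions;

  speak(options: SpeakOptions): void {
    this.lastOptions = options;
  }

  stop(): void {
    // Nothing to interrupt in this fake
  }
}

class TalkState {
  speaking = false;
  speechText = 'Thank you for exploring NativeScript with StackBlitz!';
  readonly engine: FakeEngine;
  readonly runInZone: (fn: () => void) => void;
  readonly speakOptions: SpeakOptions;

  constructor(engine: FakeEngine, runInZone: (fn: () => void) => void) {
    this.engine = engine;
    this.runInZone = runInZone;
    this.speakOptions = { text: '', finishedCallback: () => this.reset() };
  }

  talk(): void {
    this.speakOptions.text = this.speechText;
    this.speaking = true;
    this.engine.speak(this.speakOptions);
  }

  stop(): void {
    this.engine.stop();
    this.reset();
  }

  reset(): void {
    // In Angular this wrapper is ngZone.run, so bindings see the change
    this.runInZone(() => {
      this.speaking = false;
    });
  }
}

let zoneEntries = 0;
const state = new TalkState(new FakeEngine(), (fn) => {
  zoneEntries++;
  fn();
});

state.talk();
// Simulate the native delegate reporting that speech finished
state.engine.lastOptions?.finishedCallback?.();
state.talk();
state.stop(); // the button path also goes through reset
```

Injecting the zone wrapper this way is only a testing convenience; the demo component calls `this.ngZone.run` directly, which is simpler when the logic lives in the component.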

The Angular Component Template

Assuming a basic level of Angular understanding, there is not much to discuss about this component template. We use [(ngModel)] to bind the TextView to speechText, which the talk method copies into the options we pass to our platform-native API when the "Talk to me!" button is tapped.

```html
<ActionBar title="Text to Audible Speech"></ActionBar>
<StackLayout class="p-30">
  <Label
    text="Be sure to turn your speaker on and volume up."
    class="instruction"
    textWrap="true"
    width="250"
  ></Label>
  <TextView
    [(ngModel)]="speechText"
    hint="Type text to speak..."
    class="input"
    height="100"
  ></TextView>
  <Button
    text="Talk to me!"
    class="btn-primary m-t-20"
    (tap)="talk()"
  ></Button>
  <Button
    text="Stop"
    (tap)="stop()"
    [visibility]="speaking ? 'visible' : 'hidden'"
    class="btn-secondary"
  ></Button>
</StackLayout>
```

In Conclusion

It's always a neat moment when developers see real-time updates flow from StackBlitz to their phone and realize how empowering native APIs can be. The goal is for it to feel just like any JavaScript application you've already written.

We hope you enjoyed the text to speech demo.